We’ve now conducted (or are in the process of conducting) realist evaluations of five large, multi-year, multi-country programmes (BCURE, BRACED, Compass, WAFM and FoodTrade). It hasn’t always been an easy journey. Our evaluations are pushing the frontiers of the realist approach, with a larger scale and scope than most realist evaluations in progress, involving complicated multi-sectoral interventions with multiple levels of abstraction. Some of the challenges, opportunities and lessons learned were documented by the BCURE team in a CDI practice paper in 2016 – this has been downloaded over 4000 times, so we know we aren’t alone in wanting practical and accessible advice!

A few years further down the realist road, a group of Itad staff and associate consultants working on these five projects have formed a Realist Evaluation Learning Group, to share and capture what we’ve learned along the way. Over the next few months, we’ll be publishing a series of blogs sharing our reflections, working towards a paper or series of learning briefs later in the year (TBC!). Whether you’re an evaluator or a commissioner, completely new to the approach or wrestling with your own realist evaluation in progress, we’d love you to join the conversation by emailing us directly (at realism@itad.com) or commenting on our blogs.

So what is realist evaluation?

Realist evaluation doesn’t ask ‘what works?’ but rather ‘what works, for whom, and in what circumstances?’ A big part of its appeal is that it engages with complexity, recognising that programmes don’t work in the same way for everyone, everywhere. It does this by systematically developing and testing theories about the causal mechanisms that programmes ‘spark’ (or fail to spark) in different contexts, leading to different outcomes. For more of an introduction, check out Gill Westhorp’s excellent short intro paper here.

Is it worth doing a realist evaluation?

We sometimes get asked: is realist evaluation worth it? What value does it add over and above more ‘standard’ non-experimental evaluations, which often use theories of change to investigate whether and why a programme worked?

It’s an important question because realist evaluation is quite far from ‘business as usual’ in evaluation practice. It requires a specific way of thinking, theorising, interviewing, coding and analysing (based on an underlying realist philosophy), ideally through an iterative approach involving multiple rounds of data collection. This can require significant capacity building within research teams (which has its own challenges – the subject of a future blog!). It can also be fairly resource-intensive.

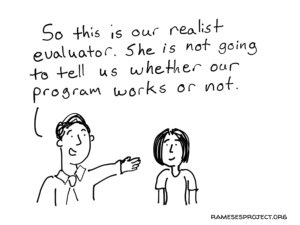

Nonetheless, our group’s qualified answer to this question is ‘yes’: realist evaluation is worth it, as long as it’s chosen in appropriate situations (again, more on this in a future blog…), applied thoughtfully, and commissioners understand that it won’t give simple answers about ‘what works.’ Rather, it will generate more nuanced insights along the lines of (in the words of Pawson and Tilley 2004) ‘remember A’, ‘beware of B’, ‘take care of C’, ‘D can result in both E and F’, ‘Gs and Hs are likely to interpret I quite differently’, ‘if you try J make sure that K has also been considered.’

Here are three reasons why we think realist evaluation adds value:

- It requires drilling down into what exactly it is about a programme that generates change, digging beneath the surface of the intervention to find the ‘mechanism’ at work. (A ‘mechanism’ is a causal process that generates change. So a training course is not a mechanism; the mechanism is the ‘thing’ that explains why training changes, or does not change, behaviour in a particular setting.) Identifying and investigating mechanisms forces us to clarify interventions and processes that may be fuzzy or understood quite differently by different stakeholders. These include challenging concepts that are hard to be precise about, such as resilience (in BRACED), capacity, and evidence-informed policy making (in BCURE). It also forces us to unpack interventions: what does ‘training’ actually involve; what is really meant by ‘technical support’? This can be very valuable in large, multi-stakeholder programmes where the same label might mask entirely different ways of working by different partners.

- Many non-experimental evaluations use a theory of change to structure the enquiry, but realist evaluation formalises the theory building and testing, making this enquiry more systematic and rigorous. It provides a framework to develop, test and refine theory by unpacking the mechanisms that ‘spark’ in different contexts to lead to differential outcomes (the infamous Context-Mechanism-Outcome, or ‘CMO’, configuration). In our experience, this gives the analysis a depth you often don’t get in other evaluations built around theories of change. Developing CMOs requires researchers to be very disciplined, clear and precise: there’s no getting away with fuzzy generalities.

- Realist evaluation emphasises ‘standing on the shoulders of giants’, recognising that there is no such thing as a unique programme. The theory underpinning your programme will almost certainly have been tested in the past (maybe in a different field), and doing realist evaluation means building on these theories rather than starting from scratch every time, by seeking out relevant literature, linking to it and grounding insights in it. This is not always easy, especially in programmes like BCURE that span multiple fields, from evidence-informed policy to political science to organisational change. However, the BCURE team found that grounding our theories in the literature added a depth (and credibility) to the analysis that we would not otherwise have had.

What do you think – is realist evaluation worth it? In our next blog, we’ll explore whether realist evaluation requires us to be ‘purists’, or whether it makes sense to mix realist evaluation with other evaluation approaches and methods (e.g. contribution analysis).

Realist Evaluation Learning Group, May 2018