During the impact evaluation of the Millennium Villages project in northern Ghana, we opened up our datasets for wider scrutiny. It was an arduous and often painful journey, taking years just to make small steps forward. On reflection, I do often wonder whether it was worth it. Read on for our story, and why in the end I’d probably do it again!

While the data revolution is a sign of our times, the evaluation profession has in general been slow to respond. Few evaluations in international development routinely make use of ‘big data’ and fewer still make their datasets open access. Six years ago, DFID and we struggled to find examples where evaluators had achieved open data access, despite it being commonplace in many research fields.

The importance of independence and transparency

When we started the evaluation back in 2012, the Millennium Villages approach was at the height of its controversy. We knew then that it was never going to be enough to simply present the evidence (however rigorously analysed). To be credible, we knew it mattered to be perceived as independent (with no agenda to pursue) and transparent (so that others could re-analyse our work and reach their own conclusions). Thus, our evaluation was conducted independently from the project’s implementation team, and our datasets were made widely available. There was even a ministerial commitment for the evaluation to be open access.

Delivering on this commitment, however, was far less straightforward in practice. The different legal, technical and ethical interests of our client and the project implementors were a challenge to navigate as we tried to balance data access with reducing disclosure risk: a fundamental trade-off between the ‘social benefit’ of making data available for re-analysis while also protecting ‘individual rights’ to anonymity. To illustrate the scale of the challenge, it took us over a year to negotiate an acceptable form of data access in compliance with Columbia University’s Institutional Review Board. The resulting agreement means that the household dataset is now held by the UK Data Archive (UKDA), and is accessible by application, under licence.

Beyond data archiving

While the evaluation dataset is in principle widely accessible, it isn’t totally open access (as one still needs to apply and gain approval to use it). It’s not simply a case of downloading the data. This creates many barriers and the consequence is that just a handful of people have sought access in this way. To further increase access, we also worked closely with UKDA to produce a second (redacted) dataset. This is available without seeking approval, yet there remain some barriers to access and uptake so far has been low.

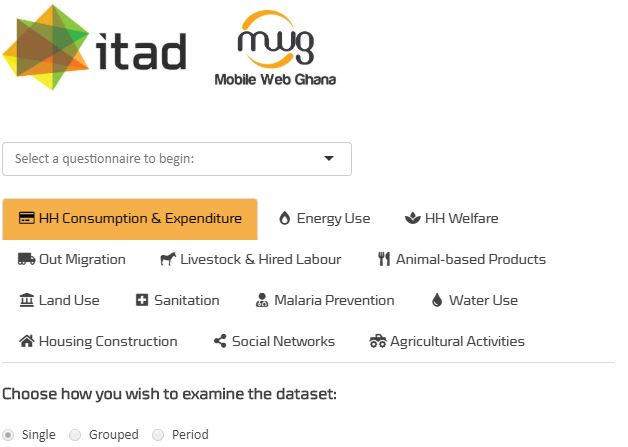

So late last year, facilitated by international development technology consultancy, We Are Potential, we collaborated with Ghanaian software developers, Mobile Web Ghana, to produce a data visualisation app that provides a simple and engaging way to explore the datasets. The app allows you to explore the household datasets and visualise different comparisons, without the need to apply for a licence. While it doesn’t give access to the raw data, it provides another entry point to explore what’s there and see what might be possible. We’re hoping that this will increase awareness and stimulate more demand for the data.

Take a look at the app and see what you think.

Was it worth it?

Well, yes and no.

Yes, because it has certainly made the evaluation more credible: in principle, anyone can now re-analyse the dataset and reach their own conclusions. This is ‘good science’ and more than that it seems more democratic: laying bare our evidence to (potential) contestation and challenge while downplaying our role as the only ‘experts’ and arbitrators of the evidence.

No, in that few people have (so far) accessed the data! I do wonder if evaluation datasets are different and have more limited appeal. Unlike big data (such as from social media) or national surveys (like the Ghana Living Standards Survey), our dataset was deliberately sampled to be representative of the project population versus a matched comparison group. While the data may still be useful for other purposes, this sampling design does limit how useful it is more generally.

So, what do I conclude?

Well, data access certainly helps drive up quality. I’m convinced that simply knowing that the dataset may be subject to external scrutiny increased the attention paid to data collection. Plus, I’d strongly argue that it helped with our credibility by shifting the emphasis away from the evaluators as the experts and towards us supporting others to reach their own conclusions about what worked and why.

In practice, however, it seems that evaluators have a long way to go before we see a data revolution around open access. There is a rich vein of opportunity in making evaluations more engaging, and specifically in finding ways to open up our evidence base for others to engage: not just data analysts but, in some cases, commissioners, implementors and civil society more broadly. It may take years, even decades, but the collective effort will be worth it if we see not only better evaluations but also more transparent ones.

Have you got views on making data more open access for evaluation? Let us know by leaving your comments below.