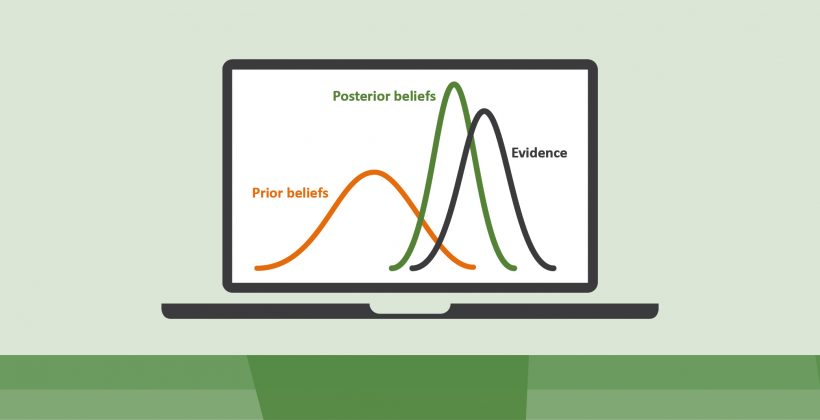

As laid out in the recent Itad brief, ‘Contribution Analysis and Bayesian Confidence Updating’, Bayesian Confidence Updating is a technique for process tracing—a qualitative, theory-based evaluation approach. As the name suggests, process tracing ‘traces’ the steps between a hypothesised cause and its outcome. Bayesian Confidence Updating is a very systematised technique for doing this.

We can think of Bayesian Confidence Updating (or Bayesian updating) as a technique for weighing evidence like that used in a court of law—we are focusing on the probability of a piece of evidence proving or disproving a hypothesis. This is different from looking for an amount of evidence, where more evidence means a hypothesis is stronger—an approach that I was more accustomed to prior to my experience with applying Bayesian updating.

From July 2021 to April 2022, we (other members of the Itad project team and I) applied Bayesian updating as part of an Itad study of British International Investment’s (BII) mobilisation of private sector investment in international projects. (BII is the UK Government’s development finance institution.) Here are some lessons we learned from this experience.

Lesson 1: Don’t begin with too many hypotheses!

One of the first steps in Bayesian updating is to develop testable hypotheses (or ‘causal claims’). In our case, after having read BII documentation and conducting an initial interview with BII staff, we developed causal claims about how BII’s investments might have contributed to investment mobilisation. In Bayesian updating, hypotheses need to be precise in order to be testable. For this reason, we initially drafted multiple versions of similar hypotheses because we wanted to differentiate between whether BII’s role was ‘influential’ or ‘critical’ in another actor’s (let’s call them ‘ABC’) decision to invest—where ‘critical’ means they would not have invested without BII’s involvement. This resulted in many pairs of hypotheses like the one below:

Hypothesis A1: ‘BII carried out activity X, and this was influential in actor ABC’s decision to invest.’

Hypothesis A2: ‘BII carried out activity X, and this was critical in actor ABC’s decision to invest.’

It is easier to prove BII’s role was influential rather than critical, because BII might have been one of many factors influencing ABC’s investment decision. In testing this pair of hypotheses, one source (stakeholder ‘L’) might state BII’s role was critical (evidence of Hypothesis A2), while another source (a different type of stakeholder, ‘M’) might state BII’s role was influential (evidence of Hypothesis A1). If we continue to treat hypotheses A1 and A2 separately, we cannot state with confidence whether either hypothesis is true, and we might have to discard both (this also comes into play in Lesson 3, below); however, if we only test A1 (influence), we can treat our evidence from source L as supporting A1 (at a minimum), since a role that was critical was also, by definition, influential. In hindsight, I would rather only introduce a hypothesis about BII’s role being critical should we learn from both/all sources that this was the case, and then retroactively amend the hypothesis, replacing ‘influential’ with ‘critical’. I expand on our reasons for coming to this conclusion in the third lesson.

Lesson 2: Approach alternative hypotheses with caution

Our next step in Bayesian updating, after developing hypotheses about BII’s contribution to change, was to develop hypotheses about other factors (beyond BII’s role) that might have influenced other investors’ decisions to invest – an important component of contribution analysis. We called these ‘alternative hypotheses’. Initially, we phrased these hypotheses as mutually exclusive with the possibility of BII’s influence. Using an unnamed International Finance Institution (IFI) as an example:

Hypothesis A3: ‘IFI carried out activity X, and this was influential in actor ABC’s decision to invest; ABC’s decision was NOT influenced by BII.’

However, in the process of collecting evidence, we found multiple instances where the evidence might support the first half of an alternative hypothesis, e.g., ‘IFI carried out activity X, and this was influential in actor ABC’s decision to invest’, but not the second half, ‘ABC’s decision was NOT influenced by BII.’ In other words, we found that IFI and BII might have both influenced ABC’s decision to invest—these two factors were not mutually exclusive. Subsequently, we amended hypotheses like A3 to instead read:

Hypothesis A3.1: ‘IFI carried out activity X, and this was influential in actor ABC’s decision to invest.’

The second half of the hypothesis was not necessary, and can be tested separately, as we did through Hypothesis A1 above (‘BII carried out activity X, and this was influential in actor ABC’s decision to invest.’).

Lesson 3: As the final step – be sure to sense-check your findings

Jumping to the end: as the brief explains, the last step in Bayesian updating is calculating the probability a hypothesis is true—the resulting value is called the ‘posterior’. It is calculated using the evidence observed, and more specifically, the values for ‘Sensitivity’ and ‘Type 1 Error’ assigned to each type of evidence. Please see the brief for more on this, but in short,

- ‘Sensitivity’ values indicate the probability of finding the evidence if a hypothesis is true, and

- ‘Type 1 Error’ values indicate the probability of finding the evidence if a hypothesis is false.

It’s important to say that in Bayesian updating we assign the Sensitivity and Type 1 Error values before we start collecting evidence (ex ante). This is a special feature of Bayesian updating because it helps to prevent an evaluator’s own confirmation bias from affecting the results.

After we have collected the evidence, we calculate the posterior value. We used a calculation tool designed by Barbara Befani (see the brief for the formulae). Posterior values fall in a range from 0.01 to 0.99, where

- a value closer to 0.99 indicates a higher level of confidence that a hypothesis is true,

- a value closer to 0.01 indicates a higher level of confidence that a hypothesis is false, and

- a value of 0.5 indicates no confidence in whether the hypothesis is true or false.
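
To make the roles of Sensitivity and Type 1 Error concrete, here is a minimal Python sketch of a single update using the standard form of Bayes’ rule. It is not the calculation tool we used, and the brief remains the reference for the formulae. The function name `update_confidence` and the example values are assumptions chosen purely for illustration.

```python
def update_confidence(prior: float, sensitivity: float, type1_error: float) -> float:
    """Return the posterior confidence in a hypothesis after observing one piece of evidence.

    prior        -- confidence in the hypothesis before seeing the evidence
    sensitivity  -- probability of finding the evidence if the hypothesis is true
    type1_error  -- probability of finding the evidence if the hypothesis is false
    """
    for name, value in (("prior", prior),
                        ("sensitivity", sensitivity),
                        ("type1_error", type1_error)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be a probability between 0 and 1")

    # Bayes' rule: P(H | E) = P(E | H) P(H) / [P(E | H) P(H) + P(E | not H) P(not H)]
    numerator = sensitivity * prior
    denominator = numerator + type1_error * (1.0 - prior)
    if denominator == 0.0:
        raise ValueError("the evidence is impossible under both outcomes")
    return numerator / denominator


# Hypothetical values, not taken from the BII study.
posterior = update_confidence(prior=0.5, sensitivity=0.8, type1_error=0.2)
print(round(posterior, 2))
```

Evidence that is equally likely whether the hypothesis is true or false (Sensitivity equal to Type 1 Error) leaves the confidence where it started, which is why a posterior that stays at 0.5 tells us nothing either way.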

After calculating the posterior, we needed to sense-check the value to see if it chimed with what we understood to be true based on the interviews we conducted and the documents we read.

Now bear with me for a mind-bending explanation of our technical approach in the example below!

Let’s assume we kept the two hypotheses from above: A1 (‘BII…was influential in actor ABC’s decision to invest.’) and A2 (‘BII…was critical in actor ABC’s decision to invest.’). Then we would use evidence from source ‘M’ in support of A1 (M stated BII was influential) and evidence from source ‘L’ in support of A2 (L stated BII’s role was critical). Conversely, evidence from ‘M’ weakens hypothesis A2 (it appears to refute it), and evidence from ‘L’ weakens hypothesis A1. In this scenario we found both hypotheses had posteriors of 0.27, indicating a high level of confidence the hypotheses are false. Although we followed the correct technical approach, logically, this would seem to contradict our evidence from source ‘L’ and source ‘M’ that BII did have an effect on ABC’s investment decision, whether ‘influential’ or ‘critical’. It doesn’t make sense to report hypotheses A1 and A2 as false.

Instead, it is better to use just one hypothesis, and we would choose to use A1 rather than A2, because the evidence ‘threshold’ for proving BII’s influence is lower than the threshold for proving its role was critical. We would then use both pieces of evidence, from sources ‘L’ and ‘M’, to calculate the posterior for A1. This results in a new posterior of 0.76, a high level of confidence that hypothesis A1 is true. This is more logical, based on the evidence we collected. In fact, this sense-checking process was one of the reasons we arrived at Lesson 1—that we had too many hypotheses.
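
The effect of splitting versus merging hypotheses can be illustrated with a short Python sketch. Every number in it is made up, so it does not reproduce the posteriors of 0.27 and 0.76 from our study. It also assumes a prior of 0.5 and that each piece of evidence can be applied one after the other as if independent, which is a simplification rather than a description of the tool we used.

```python
from typing import Iterable, Tuple

# One piece of evidence as (sensitivity, type 1 error). Hypothetical type alias.
Evidence = Tuple[float, float]


def posterior_after(prior: float, evidence: Iterable[Evidence]) -> float:
    """Apply Bayes' rule once per piece of evidence, carrying the confidence forward."""
    confidence = prior
    for sensitivity, type1_error in evidence:
        numerator = sensitivity * confidence
        confidence = numerator / (numerator + type1_error * (1.0 - confidence))
    return confidence


PRIOR = 0.5            # assumed starting point: no confidence either way
SUPPORTS = (0.7, 0.3)  # made-up values: evidence more likely if the hypothesis is true
WEAKENS = (0.2, 0.6)   # made-up values: evidence more likely if the hypothesis is false

# Split hypotheses: each source supports one hypothesis and weakens the other.
split_a1 = posterior_after(PRIOR, [SUPPORTS, WEAKENS])  # M supports A1, L weakens it
split_a2 = posterior_after(PRIOR, [WEAKENS, SUPPORTS])  # M weakens A2, L supports it

# Single hypothesis A1 (influence): both sources count as support.
merged_a1 = posterior_after(PRIOR, [SUPPORTS, SUPPORTS])

print(f"split A1:  {split_a1:.2f}")
print(f"split A2:  {split_a2:.2f}")
print(f"merged A1: {merged_a1:.2f}")
```

With these illustrative values, both split hypotheses end up below 0.5 while the single hypothesis ends up well above it, which is the same pattern we saw in our own results.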

Take a pragmatic approach to a complicated technique

Although the steps in Bayesian updating are described as a linear process (and this is the order in which I’ve presented the three lessons), in its application, we found we often needed to revisit earlier steps, and hypotheses in particular, to make the process work for us. We needed to make sure that valid evidence could still be used for learning, rather than rejecting evidence or hypotheses in a ‘computer says no’ type of response (Lesson 3). This is especially important because, unlike an evaluation approach that might rely on many dozens or hundreds of data points, Bayesian updating (a systematic technique for weighing evidence ex ante to avoid confirmation bias and to then test confidence in hypotheses) can be carried out with relatively few data points. We needed to make the most of the data that we did have. This necessitated a return to the hypotheses to rationalise them (Lesson 1). It was also only after the process of data collection that we realised that some of our ‘alternative’ hypotheses were not as alternative as we thought, but actually able to sit side-by-side with our original hypothesis (Lesson 2).

Jessica Rust-Smith is an Independent Consultant in M&E for Private Sector Development and a Case Study Evaluator in the BII Longitudinal Mobilisation Study (https://www.itad.com/project/cdc-longitudinal-study/).