With family planning funding under increasing pressure, decision-makers need low-cost, credible ways to demonstrate the impact of their programmes.

Traditional impact evaluations using household surveys can cost thousands or even millions of dollars. In contrast, data from health management information systems (HMIS) is already collected routinely from health facilities and therefore offers a tempting alternative.

But can HMIS data be used to deliver rigorous impact estimates? And could automation make it nearly free? Itad’s recent evaluation of The Challenge Initiative (TCI) was designed to test exactly this.

About The Challenge Initiative

TCI supports 214 local governments (LGs) across 13 countries to scale up high-impact family planning interventions, from immediate postpartum family planning and mass media campaigns to community health worker outreach. TCI aims to strengthen government stewardship, build self-reliance, and increase modern contraceptive use amongst the urban poor.

Can HMIS data be used for impact evaluations?

Traditionally, HMIS data has been used primarily for programme monitoring and management, not for estimating population-level outcomes. It has also historically been underutilised because of significant concerns about data quality. Unlike household surveys, HMIS data comes from facilities rather than representative population samples, which makes it challenging to estimate population-level contraceptive uptake.

However, in the context of financial constraints, the appeal of HMIS data for evaluation is obvious: it is already collected, at high frequency, and is far cheaper than primary data collection.

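Therefore, in 2021, TCI asked Itad to find out whether HMIS data could produce credible impact estimates using Interrupted Time Series (ITS) analysis, a quasi-experimental design that estimates an intervention's effect by comparing the trend in an outcome after the intervention with the trend projected from the period before it.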
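To make the ITS idea concrete, here is a minimal sketch of a single-interruption segmented regression, a common way of specifying ITS. It assumes a monthly series of facility-reported client counts and a known intervention start month; the function names `fit_its` and `counterfactual_gap` are hypothetical, and the article does not say which ITS specification Itad used. A real analysis would also need to handle seasonality, autocorrelation and data-quality breaks.

```python
import numpy as np


def fit_its(y, t0):
    """Fit a single-interruption segmented regression by ordinary least squares.

    Model (a common ITS specification, assumed here):
        y_t = b0 + b1*t + b2*D_t + b3*(t - t0)*D_t
    where D_t = 1 from month t0 onwards and 0 before it.
    Returns [b0, b1, b2, b3]: baseline level, pre-intervention trend,
    immediate level change and change in trend after the intervention.
    """
    y = np.asarray(y, dtype=float)
    t = np.arange(len(y), dtype=float)
    d = (t >= t0).astype(float)
    X = np.column_stack([np.ones_like(t), t, d, (t - t0) * d])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


def counterfactual_gap(y, t0):
    """Fitted post-intervention total minus the total projected from the
    pre-intervention trend alone, i.e. the estimated additional clients."""
    _, _, b2, b3 = fit_its(y, t0)
    t_post = np.arange(t0, len(y), dtype=float)
    # Effect in each post month = level change + accumulated change in trend.
    return float(np.sum(b2 + b3 * (t_post - t0)))


# Hypothetical, noise-free example: 24 pre-intervention months, 12 after.
t = np.arange(36)
d = (t >= 24).astype(float)
y = 100 + 2 * t + 10 * d + 1 * (t - 24) * d
coef = fit_its(y, t0=24)
gap = counterfactual_gap(y, t0=24)
```

With real HMIS data the series is noisy, so the coefficients carry uncertainty, and the projected counterfactual is only as credible as the data behind the pre-intervention trend.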

Putting HMIS to the test

To test the potential of HMIS data, we undertook a rigorous but resource-intensive analysis approach. This involved data quality assessments, intensive data cleaning, context analysis and expert interpretation and validation.

The results were striking. Across 40 LGs in seven countries, our HMIS-based estimates tracked closely with previous independent evaluations of TCI. This demonstrates that carefully cleaned HMIS data can produce credible impact estimates comparable to expensive data collection methods at a fraction of the cost.

However, despite these robust results, there was a catch: our approach still required substantial researcher involvement, time and resources. With budgets constrained, we wanted to know whether we could automate the entire analysis process and make impact evaluation almost free.

Testing automation: a head-to-head comparison

We built a fully automated ITS approach that required virtually no researcher involvement: just code, computers and the health data. We then compared it with our resource-intensive approach to understand the trade-offs between affordability and analytical rigour.

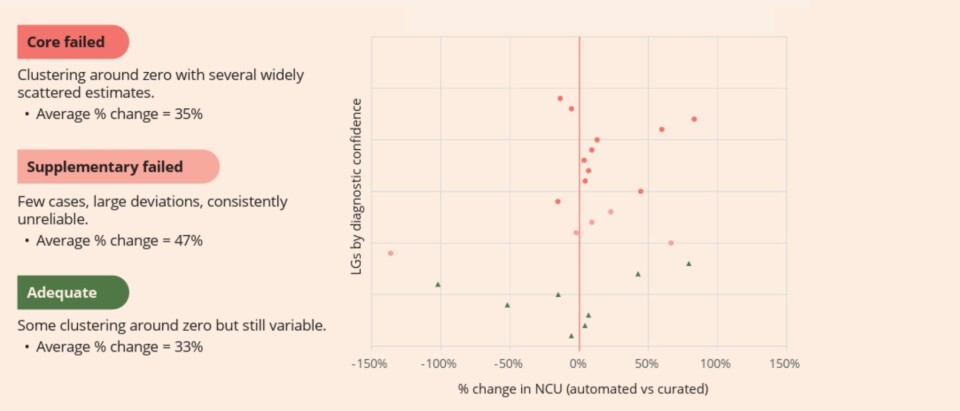

We tested both approaches using data from 28 LGs. For each LG, we compared the relative (%) and absolute change in the number of clients estimated by the automated approach with that estimated by the resource-intensive (curated) approach. The goal was not simply to compare the numbers, but to understand how much confidence decision-makers can reasonably place in a low-cost automated approach.
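The per-LG comparison can be sketched as follows, assuming each approach yields a single estimate of the change in clients per LG and treating the resource-intensive (curated) estimate as the benchmark. The helper names, LG names and numbers are hypothetical, not TCI results.

```python
import math


def lg_differences(automated, curated):
    """Absolute and relative (%) difference of automated vs curated estimates.

    Both arguments map an LG name to its estimated change in clients.
    The curated estimate is used as the denominator of the relative difference.
    """
    rows = {}
    for lg, cur in curated.items():
        absolute = automated[lg] - cur
        relative = 100.0 * absolute / cur if cur != 0 else math.nan
        rows[lg] = (absolute, relative)
    return rows


def mean_abs_pct_difference(rows):
    """Average size of the relative differences, ignoring their sign."""
    vals = [abs(rel) for _, rel in rows.values() if not math.isnan(rel)]
    return sum(vals) / len(vals) if vals else math.nan


# Hypothetical example with two LGs.
curated = {"LG-A": 1000, "LG-B": 500}
automated = {"LG-A": 1300, "LG-B": 450}
rows = lg_differences(automated, curated)
avg = mean_abs_pct_difference(rows)
```

Averaging absolute rather than signed differences stops over- and underestimates from cancelling out, which is one reasonable reading of an "average difference"; the article does not specify the exact metric behind its headline figure.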

So, what did we find?

Despite TCI’s various investments in strengthening HMIS data quality and our stringent inclusion criteria, the automated estimates differed from those of the resource-intensive approach by an average of 34% (see Fig. 1 below). In some LGs, the divergence was even greater. Crucially, the automated approach tended to overestimate impact, and this persisted even where it appeared to be “performing well” according to the team’s diagnostic checks.

This is concerning because inflated impact estimates can mislead decision-makers, distort resource allocation and undermine programme credibility. Ultimately, without human involvement in cleaning, verifying and interpreting the data, automated HMIS-based ITS models cannot generate impact estimates reliable enough to inform decision-making.

Fig. 1. NCU change by automated diagnostic (%)

But this doesn’t mean automation is useless

Although we found that fully automated approaches are not reliable enough for decision-making, they still have value.

While individual LG estimates varied substantially from those of the resource-intensive approach, aggregate portfolio-level estimates were more closely aligned. This suggests that automated approaches may provide a reasonable signal of overall impact. By scanning the portfolio to surface early insights and flag areas for deeper investigation, automation could therefore reduce costs for programme managers.
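A short calculation shows how a portfolio total can sit closer to the benchmark than the individual LGs it is built from: when LG-level errors differ in sign or size, they partly offset once estimates are summed. This is one possible mechanism, not a finding from the evaluation; the helper `portfolio_vs_lg` and the numbers are hypothetical.

```python
def portfolio_vs_lg(automated, curated):
    """Return (mean per-LG absolute % difference, portfolio-level absolute % difference).

    Both arguments map an LG name to its estimated change in clients;
    curated estimates are the benchmark. LGs with a zero benchmark are skipped.
    """
    lgs = [lg for lg, c in curated.items() if c != 0]
    total_auto = sum(automated[lg] for lg in lgs)
    total_cur = sum(curated[lg] for lg in lgs)
    if not lgs or total_cur == 0:
        return float("nan"), float("nan")
    # Error size for each LG on its own.
    per_lg = [100.0 * abs(automated[lg] - curated[lg]) / abs(curated[lg]) for lg in lgs]
    mean_lg = sum(per_lg) / len(per_lg)
    # Error size once all LGs are summed into one portfolio estimate.
    portfolio = 100.0 * abs(total_auto - total_cur) / abs(total_cur)
    return mean_lg, portfolio


# Hypothetical portfolio of three LGs.
curated = {"A": 1000, "B": 1000, "C": 500}
automated = {"A": 1400, "B": 700, "C": 550}
mean_lg, portfolio = portfolio_vs_lg(automated, curated)
```

Here the LGs differ from the benchmark by about 27% on average, yet the portfolio total is only 6% off, because LG B's underestimate offsets most of the overestimates elsewhere. The same offsetting means a close portfolio figure says little about any single LG.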

What does this mean for family planning programmes?

Our work shows that HMIS data holds enormous potential for impact evaluation when paired with rigorous verification and context-sensitive analysis. Automated approaches cannot replace expert judgement and should not be used to drive major decisions, but they can still be used to screen portfolios and flag areas for further enquiry.

Strategic use of both automated and resource-intensive approaches can therefore balance cost, scale, and rigour. It is not about choosing between the two, but about deploying both in the right combination. This will help to unlock smarter investments, stronger programmes and better outcomes for the communities that family planning interventions serve.