Evaluations can only support evidence-informed decision making if they provide the information needed by decision-makers. Generating the right information is not always easy, but it is especially difficult in complex evaluations.

This week, in the BMJ Global Health journal, evaluators from Itad and KIT Royal Tropical Institute

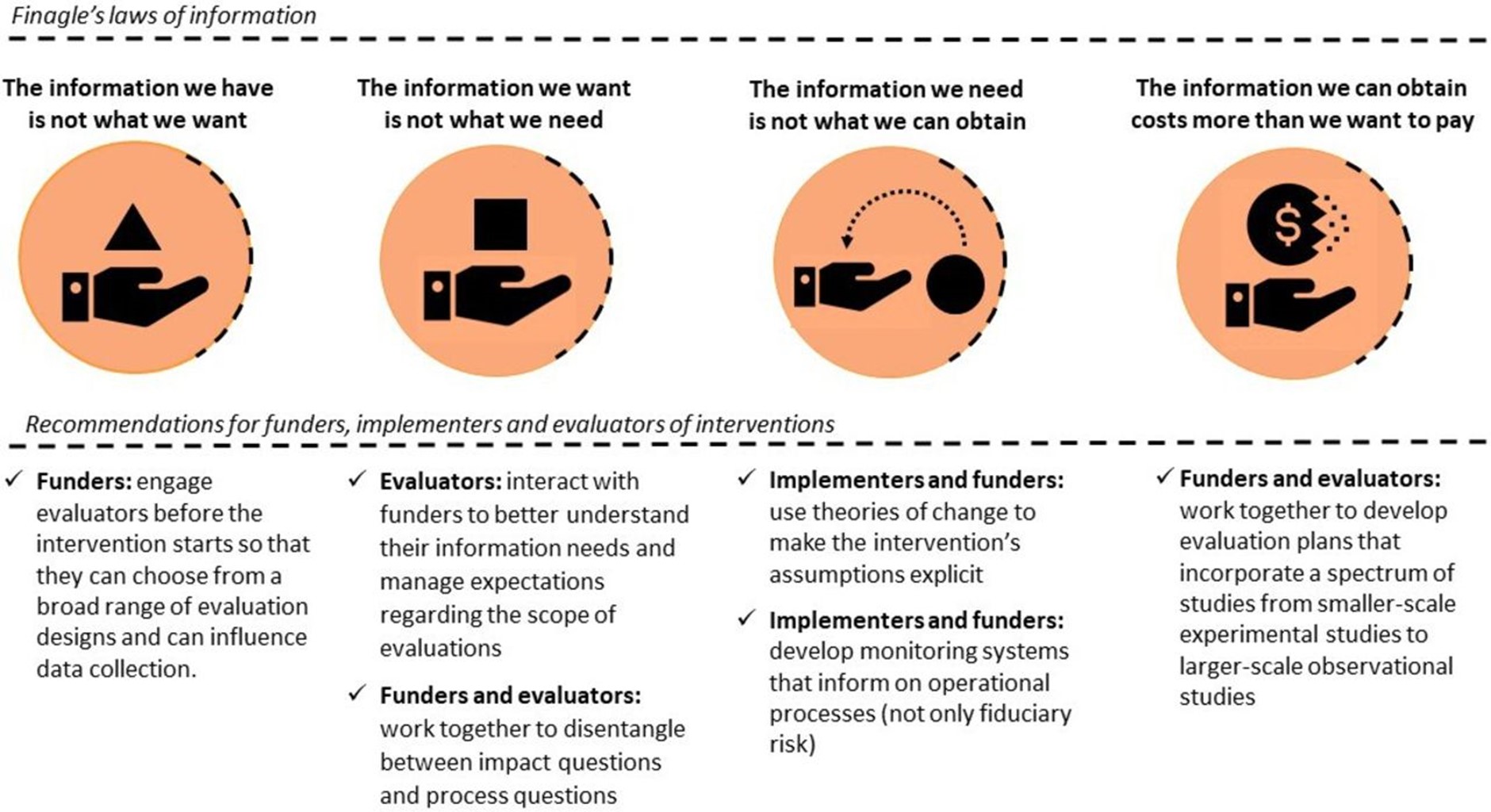

provide important recommendations for funders, implementors and evaluators on how to effectively evaluate complex interventions, based on Finagle’s laws of information.

The article highlights their difficulties in evaluating an innovative programme which provides demographic and geographical information to support health service delivery. The programme, ‘Geo-Referenced Infrastructure and Demographic Data for Development (GRID3)’, was implemented in Nigeria’s northern states to support polio and measles immunisation campaigns. In 2019, Itad and KIT were commissioned by the Bill & Melinda Gates Foundation to evaluate GRID3’s use and impact between 2012 and 2019.

Recommendations to inform methods and judgements

Rigorous evaluations should inform evidence-informed practice in global health. Yet there are still many instances where the evidence produced by evaluators and researchers cannot support evidence-informed decision making because it fails to provide the information actually needed by decision-makers.

Finagle’s laws of information provide a useful lens through which to highlight potential areas in which evaluations can fail.

Co-author Callum Taylor of Itad said:

The GRID3 programme presented a series of interesting challenges to us as evaluators. We are pleased to have turned those challenges into lessons for supporting evidence-informed decision making through evaluations of complex systems.

Drawing on their experience, the authors recommend that:

- funders engage evaluators before the start of an evaluation to enable evaluators to influence study design and data collection;

- evaluators interact with funders to understand their information needs, manage expectations regarding the scope of evaluations, and distinguish between impact and process questions;

- implementers and funders use theories of change to make the intervention’s assumptions explicit and to develop monitoring systems that inform on operational processes (not only fiduciary risk); and

- funders and evaluators work together to develop evaluation plans that incorporate a spectrum of studies from smaller-scale experimental studies to larger-scale observational studies.

Co-author Sandra Alba of KIT Royal Tropical Institute said:

Evaluating complex interventions is a complex endeavour by definition. By reflecting candidly on our own difficulties, we hope we can help funders, evaluators and implementers to work together to maximise the usefulness of their own evaluations.

The article and its recommendations serve as a reminder that commissioners, evaluators and implementers share a joint responsibility for the success of an evaluation.