A few weeks ago, I attended a seminar on intervention logic and contribution analysis, organised by EuropeAid and delivered by Steve Montague. Much of the seminar was based on Funnell and Rogers’ Purposeful Program Theory, but it was nevertheless a useful refresher and prompted some reflections on divisions in evaluation markets and on how to better visualise intervention logics.

Two evaluation markets

The first thing that struck me on entering the room was the divide between the EC and UK evaluation markets. Each market has its usual suspects, but there is very little overlap between the two: a largely different group of individuals and organisations attends comparable DFID events. While this phenomenon is known to most people in the sector, it made me wonder again to what extent it undermines knowledge sharing, learning and innovation.

A similar reflection came out of a conversation I had with Steve Montague on Qualitative Comparative Analysis (QCA). Interestingly, he mentioned that the method is hardly used in North America, even though it was originally developed by Charles Ragin in the United States. This mirrors another divide in the evaluation market: the divide between Europeans and North Americans in their preferred evaluation methods, with North Americans often favouring quantitative methods and Europeans promoting more qualitative ones (with, of course, many exceptions). This divide, in turn, is a consequence of diverging paths in social science research over recent decades, particularly in political science and sociology.

However, little is known about the extent to which such divisions in evaluation markets, and other systemic factors, can influence evaluation practice and uptake. The Centre for Development Impact (CDI), a joint initiative between Itad, IDS and the University of East Anglia, is starting to fill this research gap with its new theme on ‘Improving the evaluation system to deliver impact’. A recent paper my colleague Rob Lloyd and I wrote on drivers of evaluation quality provided some initial insights into this area, but much more needs to be done to understand evaluation practice from a systems perspective. CDI will definitely be the space to watch for this.

Visualisations of complex intervention logics

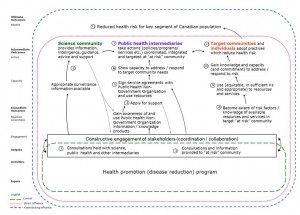

Apart from these reflections on divisions in the evaluation market, Steve presented a few interesting practical examples of intervention logics. One was a Theory of Change (ToC) built on thinking about spheres of control. In the figure above, the green box represents the sphere of control, the red box the sphere of direct influence, and the blue box the sphere of indirect influence. While thinking in terms of spheres of control is nothing new and is a key part of outcome mapping, I thought this was a good practical example of how to build a ToC on this model, including feedback loops. The areas of change framework used in Itad’s ‘Building resilience and adaptation to climate extremes and disasters’ (BRACED) evaluation follows similar principles.

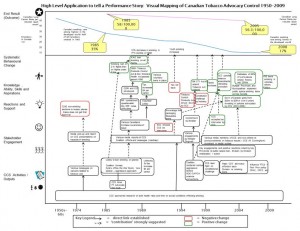

Steve also presented a practical example of how to visualise a complex change process and link it to quantitative outcome-level results. In the figure above, a complex tobacco advocacy campaign is linked to statistics on smoking rates and lung cancer rates. Again, this is not a new approach or innovation, but it is a useful practical example of how to visualise such change processes, and one that could be used more often in evaluations.

In sum, the seminar showed me that there are easy ways to visualise ToCs much better than with the usual box-and-arrow diagrams, if only we as a profession start thinking more outside the box. Perhaps more importantly, however, the seminar also confirmed for me that there is a large divide in evaluation markets that is likely to affect evaluation quality and uptake. To move forward as a profession, we need to do more to overcome it.

Florian Schatz, July 2015